AI skills assessment platform – Measure AI talent

The gap between AI ambition and capability has never been wider. Demand for AI talent outstrips supply by 3.2:1, with some specializations facing shortages above 50% of required headcount. This isn’t a problem hiring alone can solve.

AI skills assessment gives organizations a clear, data-driven view of their workforce’s actual capabilities versus what’s needed to execute their AI strategy. Rather than relying on resumes or credentials, you can measure technical proficiency, implementation readiness, ethical awareness, and strategic thinking through structured frameworks that reveal both strengths and critical gaps.

Why AI skills assessment matters in 2026

Skills requirements in roles highly exposed to AI are changing 66% faster than in other jobs, a gap more than 2.5 times wider than the one measured just a year earlier. The share of job postings requiring at least one AI skill rose from 0.5% in 2010 to around 1.7% in 2024, with a sharp 31% jump from 2023 to 2024. Unique postings mentioning generative AI skills grew from dozens in early 2021 to nearly 10,000 by May 2025, with non-IT roles showing roughly 9× growth in generative AI skill requirements between 2022 and 2024.

AI skills assessment provides the foundation for informed talent decisions in this environment. It can reduce bias in hiring and development by evaluating candidates on demonstrated abilities rather than resume keywords. For existing employees, assessment creates a baseline for personalized development and identifies high-potential internal candidates for AI-focused roles.

Around 78% of organizations now use AI, up from 55% a year earlier. Yet lack of in-house AI skills and leadership expertise remains one of the top barriers to scaling AI and realizing productivity gains. Assessment helps quantify that skills gap and transforms it from a vague concern into a manageable problem with specific targets.

SkillPanel approaches AI skills assessment through comprehensive skills mapping that goes beyond surface-level testing. The platform evaluates thousands of digital and IT skills through multi-source assessments that combine self-evaluations, peer reviews, manager ratings, and hands-on technical challenges, creating an objective, holistic view of each person’s capabilities.

Core AI competencies to evaluate

Effective AI skills assessment requires evaluating competencies across technical, strategic, and human-centered dimensions. Organizations should concentrate on a small set of broad areas that appear consistently in frameworks from universities, standards bodies, and industry consortia.

Technical AI literacy

Technical AI literacy forms the foundation for anyone working with or around AI systems. This means understanding core concepts like machine learning, generative models, neural networks, and training data, along with their limitations and failure modes. People need to interpret model outputs critically, recognize when AI is appropriate for a task, and communicate effectively about AI capabilities and risks.

Data fluency sits at the heart of technical literacy. Professionals should be comfortable reading charts, understanding data quality issues, recognizing potential sources of bias in datasets, and asking the right questions about privacy, security, and representativeness.

Scenario-based assessment should test whether people can explain AI concepts clearly, identify red flags in AI system outputs, and make sound judgments about which AI tools suit specific use cases.

Applied AI implementation skills

Implementation skills bridge the gap between understanding AI conceptually and putting it to work. This includes proficiency in using AI tools to redesign everyday tasks, from effective prompt engineering to chaining multiple AI capabilities into useful workflows. People should demonstrate competence in monitoring AI system performance, troubleshooting issues, and iterating to improve results.

For roles working directly with technical teams, applied skills include translating business problems into AI-amenable use cases and evaluating feasibility, value, and risk. Technical roles require deeper capabilities in building, fine-tuning, and integrating AI systems, covering data pipelines, model selection and evaluation, deployment strategies, and MLOps practices.

A skills assessment platform like SkillPanel evaluates implementation skills through practical, hands-on challenges that mirror real work tasks, measuring how people solve realistic technical problems under conditions similar to actual job requirements.

AI ethics and governance knowledge

The ethical dimension of AI competency has moved from optional awareness to critical requirement. Professionals across roles need the ability to identify and mitigate ethical, legal, and societal risks including bias, transparency failures, accountability gaps, safety issues, and compliance violations.

Assessment should evaluate whether people can spot potential bias in training data or model outputs, understand when and how to document AI system decisions for auditability, recognize situations requiring human oversight, and escalate concerns about misuse through appropriate channels.

Organizations in regulated industries face particularly acute needs around AI ethics and governance capabilities. Healthcare systems report that while AI is embedded in imaging, documentation, and decision support, most clinicians lack formal training in AI fundamentals, data governance, and evaluation of AI tools. Financial services face persistent gaps in explainable AI, data ethics, and AI governance frameworks, with many institutions reporting that governance and compliance skills are now as difficult to hire as pure data science capabilities.

Strategic AI thinking

Strategic thinking about AI involves recognizing how these technologies can advance business objectives and making informed decisions about where to invest resources. Professionals should identify opportunities for AI integration, articulate potential impact on organizational strategy, and evaluate tradeoffs between different approaches.

CHROs interviewed in 2025 emphasize that AI is fundamentally changing how talent levels are defined. One leader noted that “a level 2 engineer with AI is starting to look like a level 4,” underscoring that evaluation now must consider how effectively employees augment themselves with AI rather than only their standalone expertise. This shifts assessment from static competency models to dynamic evaluations of how people leverage AI to take on more complex work.

Leaders need to be able to sponsor AI initiatives effectively: setting clear success criteria, allocating appropriate resources, removing organizational barriers, and making course corrections based on early results.

Human-AI collaboration capabilities

The future of work involves humans and AI systems working together, each contributing distinctive strengths. Josh Bersin and other workforce analysts note that as AI handles more routine and purely technical tasks, organizations must assess and hire for uniquely human capabilities such as creativity, empathy, and emotional intelligence.

Assessment should evaluate whether people can integrate AI tools into their work while strengthening rather than outsourcing distinctly human skills. Change readiness forms another crucial dimension, including learning agility, willingness to reskill, comfort with ambiguity, and capacity to work in cross-functional teams actively reshaping roles and workflows around AI.

How to conduct your AI skills assessment

Conducting effective AI skills assessment requires combining multiple evaluation methods to build a complete picture of individual and organizational capabilities. A single approach provides limited insight and introduces systematic blind spots.

Self-assessment framework

Self-assessment allows individuals to reflect on their own AI competencies against clear benchmarks and identify areas where they recognize gaps. A well-structured framework should present specific, concrete skill descriptions rather than vague categories, enabling people to rate themselves accurately against defined proficiency levels.

The value lies primarily in building awareness and ownership of development. People who participate in honest self-evaluation tend to be more motivated to address gaps because they’ve explicitly acknowledged them. However, research consistently shows that people struggle to assess their own competence accurately, particularly at low skill levels. Organizations should treat self-assessment as one input into a broader evaluation process.
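To make "one input among several" concrete, here is a minimal Python sketch that blends self-ratings with peer, manager, and hands-on test results while down-weighting the self-rating, and that flags how far someone's self-view sits from the other evidence. The source weights, the 1-4 rating scale, and every name below are illustrative assumptions, not SkillPanel's or any published scoring model.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical weights: self-ratings count least because people often
# misjudge their own competence. These numbers are illustrative only.
SOURCE_WEIGHTS = {
    "self": 0.15,
    "peer": 0.20,
    "manager": 0.25,
    "practical_test": 0.40,
}


@dataclass
class SkillEvidence:
    skill: str
    ratings: dict[str, float]  # source name -> rating on an assumed 1-4 scale


def _weighted_mean(ratings: dict[str, float]) -> Optional[float]:
    """Weighted mean over the recognised sources present; None if there are none."""
    present = {s: r for s, r in ratings.items() if s in SOURCE_WEIGHTS}
    if not present:
        return None
    total_weight = sum(SOURCE_WEIGHTS[s] for s in present)
    return sum(SOURCE_WEIGHTS[s] * r for s, r in present.items()) / total_weight


def combined_score(evidence: SkillEvidence) -> float:
    """Blend every available source into one score for the skill."""
    score = _weighted_mean(evidence.ratings)
    if score is None:
        raise ValueError(f"no recognised ratings for {evidence.skill!r}")
    return score


def calibration_gap(evidence: SkillEvidence) -> Optional[float]:
    """Self-rating minus the weighted mean of all other sources.

    Positive values suggest over-estimation, negative values under-estimation.
    Returns None when there is no self-rating or nothing to compare it with.
    """
    if "self" not in evidence.ratings:
        return None
    others = {s: r for s, r in evidence.ratings.items() if s != "self"}
    baseline = _weighted_mean(others)
    return None if baseline is None else evidence.ratings["self"] - baseline
```

For a record such as `{"self": 4, "peer": 2.5, "manager": 3, "practical_test": 2}`, `calibration_gap` returns roughly 1.6, a cue to discuss the difference in a development conversation rather than to penalize anyone.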

A structured framework like the CompTIA AI Essentials approach helps establish baseline AI literacy: learners explain basic generative AI concepts, distinguish AI assistants from traditional software, and apply AI to simple work tasks with attention to data privacy and responsible use, then validate completion through a competency assessment.

Using structured assessment tools

Structured assessment tools provide standardized, objective evaluation of AI skills across your organization. These tools should test both knowledge and practical application through scenario-based questions, hands-on exercises, case study analysis, and real-world problem-solving tasks.

For technical AI skills, assessment tools should present realistic challenges that require building, modifying, or troubleshooting AI systems rather than just answering multiple-choice questions. Candidates might be asked to clean and prepare a dataset, select and justify appropriate model architectures, implement a simple machine learning pipeline, or debug existing code.
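A hands-on task can be scored automatically by running the candidate's code against hidden test cases. The sketch below assumes a hypothetical data-preparation exercise (drop rows with a missing `label`, remove exact duplicates, keep the original order); the case data and `reference_solution` are invented for illustration.

```python
from typing import Callable

Row = dict[str, object]

# Hidden cases for the hypothetical exercise: (input rows, expected output).
HIDDEN_CASES: list[tuple[list[Row], list[Row]]] = [
    ([{"text": "a", "label": 1}, {"text": "a", "label": 1}], [{"text": "a", "label": 1}]),
    ([{"text": "b", "label": None}, {"text": "c", "label": 0}], [{"text": "c", "label": 0}]),
    ([], []),
]


def grade(submission: Callable[[list[Row]], list[Row]]) -> float:
    """Return the fraction of hidden cases the submission passes.

    A case that raises an exception counts as a failure.
    """
    passed = 0
    for given, expected in HIDDEN_CASES:
        try:
            # Hand over copies so a submission that mutates its input
            # cannot affect later cases.
            if submission([dict(r) for r in given]) == expected:
                passed += 1
        except Exception:
            pass
    return passed / len(HIDDEN_CASES)


def reference_solution(rows: list[Row]) -> list[Row]:
    """Drop rows without a label, then drop exact duplicates in order."""
    seen = set()
    cleaned = []
    for row in rows:
        if row.get("label") is None:
            continue
        key = tuple(sorted(row.items()))  # hashable fingerprint of the row
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(row)
    return cleaned
```

A submission that filters missing labels but forgets deduplication passes two of the three cases and scores about 0.67, giving reviewers a partial-credit signal instead of a binary pass or fail.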

For non-technical roles, tools should evaluate practical proficiency with AI tools used in daily work, ability to craft effective prompts and refine outputs, judgment about when to use AI versus other approaches, and understanding of ethical considerations and limitations.

SkillPanel’s approach to skills testing software combines technical evaluations with assessments of applied competencies and behavioral capabilities. The platform enables organizations to customize assessments to specific roles and technology stacks, ensuring evaluation aligns with actual job requirements. Through its RealLifeTesting methodology, SkillPanel measures how candidates solve realistic problems, providing more predictive insight into on-the-job performance.

Gathering practical evidence

Practical evidence of AI skills comes from real-world application and outcomes. This includes project portfolios demonstrating AI implementation work, case studies showing how someone used AI to solve business problems, performance metrics quantifying impact of AI-powered improvements, and peer feedback on collaboration and technical judgment.

For technical roles, evidence might include contributions to AI system development visible through code repositories, documentation of model experiments and iteration, participation in model reviews and technical discussions, or presentations explaining AI approaches to non-technical stakeholders. For business roles, evidence could include process improvements achieved through AI adoption, successful change management for AI tool rollout, or identification of high-value AI use cases.

Gathering this evidence requires establishing clear documentation practices and feedback mechanisms as part of normal workflow. Regular retrospectives, project close-out reports, and peer review sessions create natural opportunities to capture concrete examples of AI skill application.

Common implementation challenges

Organizations rolling out AI skills assessments face recurring obstacles that have less to do with the AI models themselves and more to do with change management, data governance, and system integration. Understanding these challenges and their proven mitigation strategies accelerates successful implementation.

Unclear goals and weak stakeholder alignment often derail programs that launch without tight definitions of what “AI skills” mean for specific roles or how assessment results will be used. Vague rubrics and unclear use cases lead to low perceived relevance and pushback from managers who see assessments as extra work disconnected from business outcomes. Successful organizations counter this by starting with a small set of priority roles, mapping AI tasks to business KPIs, and co-designing the framework with line managers and HR while documenting explicit use cases for results. Running a short pilot to validate that scores correlate with on-the-job performance provides evidence to secure broader buy-in.

Low trust and fear of surveillance create strong employee resistance when assessments feel like monitoring or a pretext for layoffs. Workers report concerns that AI skills data may be used to rank individuals, automate performance management, or justify offshoring work. Effective mitigation includes clear, written guarantees on what the assessment will and will not be used for, transparent communication of scoring logic, sharing aggregate results with teams rather than only leadership, and framing assessments as part of funded upskilling pathways with budgeted training and time allocations.

Data, privacy, and compliance constraints often stall implementations when organizations cannot safely use real internal data for assessment tasks, or when sending content to external AI services creates regulatory and contractual risks. Common obstacles include data residency requirements and client confidentiality. Successful approaches involve building anonymization pipelines to strip sensitive details from assessment documents, hosting workflows within existing secure environments, and involving legal and data protection teams early to co-author clear data sheets spelling out data flows, retention durations, and individuals’ rights.
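A first anonymization pass can be as simple as pattern-based redaction before documents are reused in assessment tasks. The sketch below is an illustration under stated assumptions: the regular expressions and placeholder tokens are hypothetical, and pattern matching both misses identifiers it has no rule for and can over-redact (the phone pattern also catches long digit runs such as dates), so whatever pipeline you actually deploy still needs review by legal and data protection teams.

```python
import re

# Hypothetical patterns; extend them for the identifiers your data contains.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# A digit, seven or more digits/separators, then a digit. This also matches
# dates and account numbers, which is usually acceptable for redaction.
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def anonymize(text: str, client_names: list[str]) -> str:
    """Replace obvious identifiers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    # Longest names first so "Acme Bank Ltd" is handled before "Acme Bank".
    for name in sorted(client_names, key=len, reverse=True):
        # Lookarounds instead of \b so names ending in punctuation still match,
        # while "Acme" inside "Acmeville" is left alone.
        pattern = rf"(?<!\w){re.escape(name)}(?!\w)"
        text = re.sub(pattern, "[CLIENT]", text, flags=re.IGNORECASE)
    return text
```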

Integration and operationalization failures plague organizations that run successful assessments but fail to connect results into HRIS, LMS, and career frameworks, leaving insights in spreadsheets while enthusiasm fades. Practitioners report “pilot purgatory” where scores exist but don’t affect hiring, learning plans, or staffing decisions. Successful implementations define specific downstream automations before launch (score thresholds that auto-enroll employees in learning paths or populate skill profiles in talent marketplaces), integrate assessment data into existing systems and rituals like performance reviews, and assign operational ownership with clear roadmaps for refresh cycles.
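Defining downstream automations before launch can be as concrete as a table of rules. Below is a minimal Python sketch, assuming hypothetical skill keys, thresholds, and learning-path IDs; it only returns the enrollment actions and leaves the actual HRIS or LMS call to whatever integration your systems support.

```python
from dataclasses import dataclass

# Hypothetical rules: (skill key, enroll if score is below this, learning path ID)
ENROLLMENT_RULES = [
    ("prompt_engineering", 2.0, "path-genai-foundations"),
    ("data_fluency", 2.5, "path-data-literacy"),
    ("ai_governance", 3.0, "path-responsible-ai"),
]


@dataclass(frozen=True)
class Enrollment:
    employee_id: str
    path_id: str
    reason: str  # kept so employees and managers can see why they were enrolled


def enrollments_for(employee_id: str, scores: dict[str, float]) -> list[Enrollment]:
    """Turn one employee's assessment scores into learning-path enrollments."""
    actions = []
    for skill, threshold, path_id in ENROLLMENT_RULES:
        score = scores.get(skill)
        if score is None:
            continue  # not assessed yet, so no automatic action
        if score < threshold:
            actions.append(
                Enrollment(employee_id, path_id, f"{skill} score {score} is below {threshold}")
            )
    return actions
```

Keeping a human-readable `reason` on each action also supports the transparency about scoring logic that helps build trust in the program.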

Interpreting your assessment results

Raw assessment scores provide limited value without clear benchmarks and frameworks for interpretation. Organizations need structured approaches to understanding what results mean, where individuals and teams stand relative to requirements, and which gaps deserve immediate attention.

Understanding skill level benchmarks

Skill level benchmarks establish a common language for discussing proficiency. Rather than vague categories like “beginner” and “advanced,” effective benchmarks describe specific capabilities people can demonstrate at each level. For AI skills, you might define levels such as awareness (understands concepts and terminology), functional (can use AI tools for routine tasks with guidance), proficient (applies AI independently to solve problems), and expert (designs AI solutions and guides others).

Each level should include concrete examples of what people can do, not just what they know. A proficient level for prompt engineering might mean “consistently crafts effective prompts that achieve desired outputs within 2-3 iterations, troubleshoots issues systematically, and applies prompt patterns appropriately across different AI tools.”
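Behavior-based levels can also be checked mechanically: a person reaches a level only when every indicator for that level, and for every level below it, has been observed. The level names follow the awareness-to-expert scale above; the indicators paraphrase the prompt engineering example and are otherwise hypothetical, and in practice the observations would come from the assessment methods already in use.

```python
from typing import Optional

# Level names from the scale above, lowest first.
LEVELS = ["awareness", "functional", "proficient", "expert"]

# Hypothetical observable indicators for prompt engineering, per level.
PROMPT_ENGINEERING_RUBRIC: dict[str, set[str]] = {
    "awareness": {"explains what a prompt is and why wording matters"},
    "functional": {"completes routine tasks with prompt templates and guidance"},
    "proficient": {
        "reaches desired output within 2-3 iterations",
        "troubleshoots failing prompts systematically",
        "applies prompt patterns across different AI tools",
    },
    "expert": {"designs reusable prompt patterns and coaches others"},
}


def assessed_level(rubric: dict[str, set[str]], demonstrated: set[str]) -> Optional[str]:
    """Highest level whose indicators, and those of every lower level,
    were all observed. Returns None if the first level is not met."""
    achieved = None
    for level in LEVELS:
        if not rubric[level] <= demonstrated:  # subset check
            break
        achieved = level
    return achieved
```

Requiring every lower level to be met means a missing fundamental caps the result, even if someone shows a few advanced behaviors.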

External benchmarks from industry sources provide additional context, but internal benchmarks tied to your specific AI use cases and technology choices often prove more useful for development planning because they reflect what people actually need to succeed in their roles at your organization.

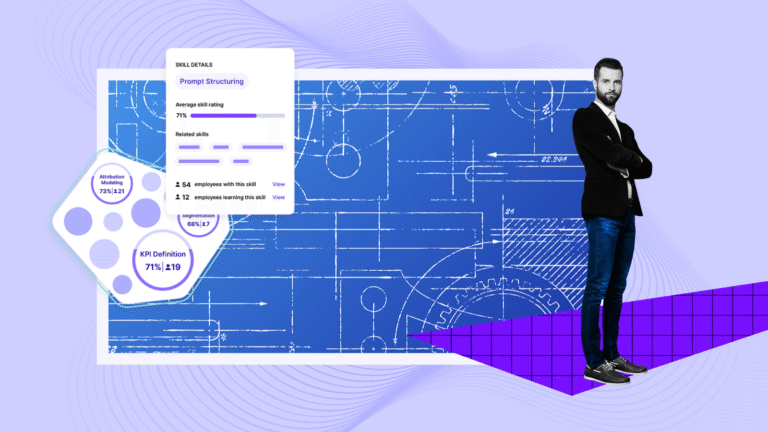

SkillPanel’s skills intelligence platform uses structured skills ontologies and a comprehensive library of digital and IT skills to establish consistent, granular benchmarks across the workforce. The platform helps organizations compare current proficiency against target levels for future roles and market standards, providing clear guidance about development priorities.

Identifying priority development areas

Not all skills gaps deserve equal attention. Priority development areas are those where closing gaps will have the greatest impact on organizational AI capability and business outcomes, considering strategic importance, current gap size, number of people affected, and feasibility of improvement.

Start by mapping assessment results against your AI strategy and roadmap. Which skills are most critical for the use cases you’re pursuing now and planning for next year? Where do current capability levels fall short of what’s required? A skill might be important in abstract terms but not actually limiting your progress if you have sufficient capability or can access it through partnerships.

Consider the distribution of skills across your workforce. A skill gap affecting five specialists might be less urgent than one affecting fifty managers who need basic AI literacy to make sound decisions about adoption. However, a critical technical capability missing entirely from your organization, even in a small team, may block progress on high-value initiatives and become top priority.

Also weigh development timelines realistically. Some skills can be built quickly through focused training and practice, while others require extended experience or background knowledge. Your priority list should balance impact with achievability, targeting gaps where you can make meaningful progress on reasonable timelines.
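One way to make this weighing explicit is a simple multiplicative score. The Python sketch below is a hypothetical heuristic, not a standard formula: it multiplies strategic importance, gap size, a damped headcount term, and feasibility, and it pushes any critical skill that is entirely absent to the top of the list, mirroring the exception described above. All field scales are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class SkillGap:
    skill: str
    strategic_importance: int  # assumed scale: 1 (nice to have) to 5 (blocks the roadmap)
    current_level: float       # assumed 0-4 scale, average for people who need the skill
    target_level: float
    people_affected: int
    feasibility: float         # assumed 0-1: how realistically the gap can close soon
    critical_and_absent: bool = False  # nobody in the organization has it yet


def priority(g: SkillGap) -> float:
    """Higher means address sooner. A hypothetical heuristic, not a standard."""
    if g.critical_and_absent:
        # A missing critical capability can block high-value work outright.
        return math.inf
    gap = max(g.target_level - g.current_level, 0.0)
    # log1p dampens headcount so fifty people outrank five
    # without swamping every other factor.
    reach = math.log1p(g.people_affected)
    return g.strategic_importance * gap * reach * g.feasibility
```

With identical importance, gap, and feasibility, a literacy gap across fifty managers outranks a gap across five specialists, while a critical skill nobody has still sorts first.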

Creating your AI skills development plan

Assessment results gain value when they drive action. A comprehensive AI skills development plan translates identified gaps into concrete learning initiatives, resource allocation, and progress tracking mechanisms.

Setting realistic learning goals

Effective learning goals follow SMART principles while accounting for practical realities of building new capabilities alongside ongoing work. Goals should specify what someone will be able to do differently, not just what topics they will study.

For a business analyst developing AI implementation skills, a realistic goal might be “apply generative AI tools to automate three repetitive analysis tasks by end of quarter, documenting productivity gains and lessons learned.” For a manager building AI literacy, it could be “evaluate AI use cases proposed by team members using a structured assessment framework covering business value, technical feasibility, and ethical considerations, with evidence of application in monthly project reviews.”

Anthony Abbatiello, Workforce Transformation Leader and Principal at PwC US, argues that CHROs must “own the workforce end to end,” including designing how human skills and AI agent skills are combined, then managing both as part of a single talent system. From his perspective, success requires moving beyond narrow technical tests toward holistic assessment of how people and AI agents together deliver outcomes across recruitment, development, and retention.

Timeline expectations should reflect the skill’s complexity and how much dedicated learning time is realistic given other responsibilities. Deep technical AI capabilities might require six to twelve months of consistent study and application, while practical proficiency with specific AI tools can often develop in weeks through regular use.

Choosing the right learning resources

The learning resources landscape for AI skills spans formal education, self-directed study, hands-on practice, mentorship, and community participation. Effective development plans typically combine multiple resource types rather than relying solely on courses.

Formal training programs and structured courses work well for building foundational knowledge and systematic understanding. Look for programs that emphasize hands-on application over pure theory, include real-world projects and examples, and provide feedback on learner work.

On-the-job learning through real projects remains the most powerful development mechanism when combined with appropriate support and guidance. Identify low-risk opportunities for people to apply emerging AI skills to actual work problems, with coaching available when they get stuck. Structure projects so people can demonstrate growing capability through concrete deliverables that contribute business value while advancing their learning.

SkillPanel integrates with online learning providers and supports centralized training requests, enabling organizations to connect assessment results directly to relevant development resources. The platform’s personalized development plans use AI-driven guidance to recommend specific learning paths tailored to each individual’s skill gaps, role requirements, and career aspirations.

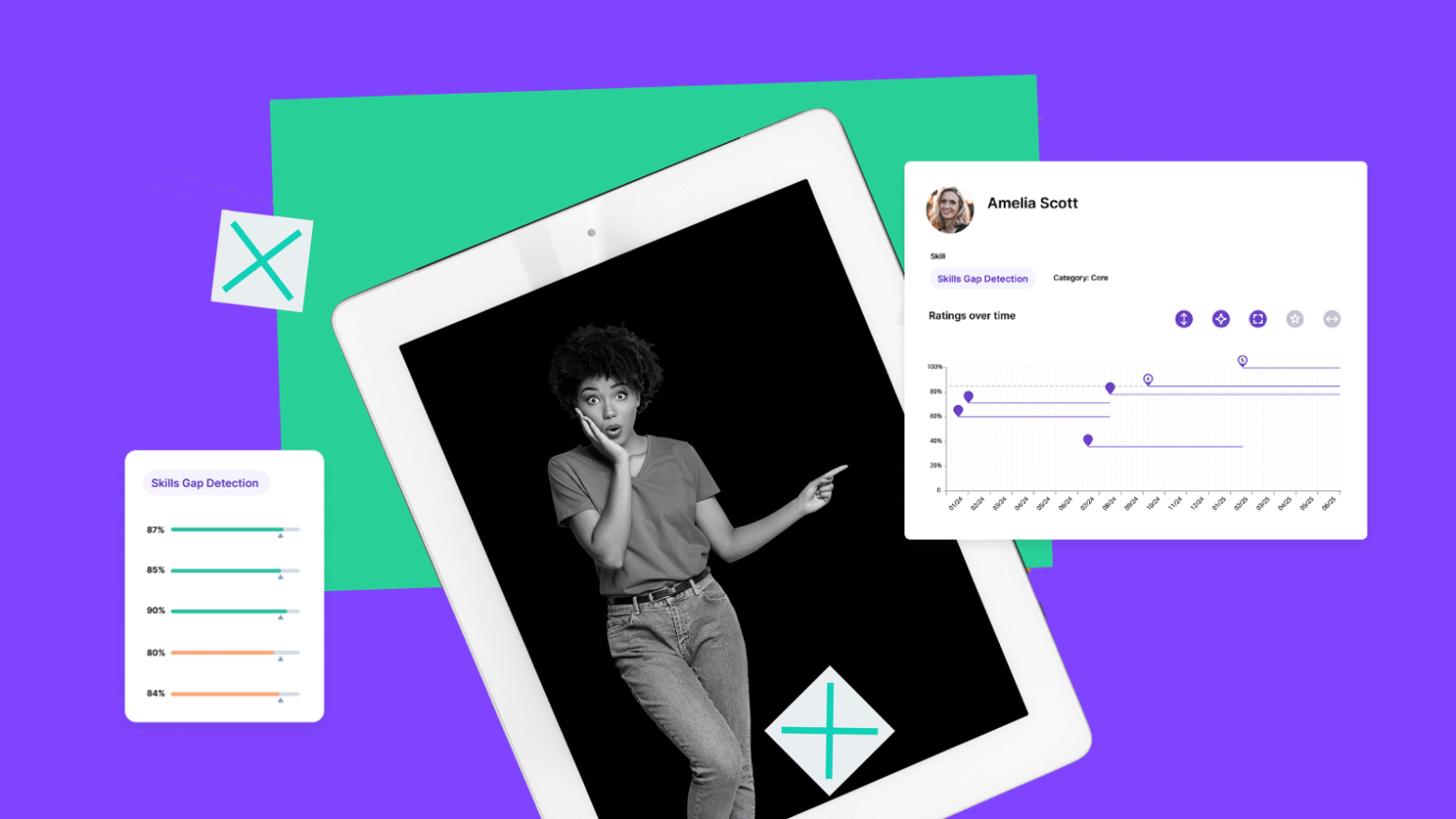

Measuring progress over time

Development without measurement becomes hope rather than strategy. Organizations should track skill progression systematically through periodic reassessment, application evidence, and business outcome metrics.

CHROs interviewed in 2025 emphasize that AI literacy should be evaluated through real-time, role-based performance rather than static courses or one-off certifications. They stress embedding assessment into daily workflows, looking at how engineers or marketers actually use AI tools to improve quality, speed, or decision-making, so AI proficiency becomes visible in business metrics rather than just training completion data.

Periodic reassessment using the same tools and benchmarks as baseline evaluation quantifies skill improvement and reveals whether development activities are working. Schedule reassessments at intervals that allow meaningful change to occur, typically quarterly for skills in active development.
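Raw score changes are hard to compare across skills with different starting points, so one simple normalization is the share of the original gap that has closed. A minimal sketch, assuming baseline scores, reassessment scores, and targets all use one scale; the function names are illustrative.

```python
from typing import Optional


def gap_closure(baseline: float, current: float, target: float) -> Optional[float]:
    """Share of the baseline gap closed at reassessment.

    0.0 means no progress, 1.0 means the target was reached, values above 1.0
    mean it was exceeded, and negative values mean the score fell.
    Returns None when the baseline already met the target.
    """
    initial_gap = target - baseline
    if initial_gap <= 0:
        return None
    return (current - baseline) / initial_gap


def team_report(
    baseline: dict[str, float],
    reassessment: dict[str, float],
    targets: dict[str, float],
) -> dict[str, Optional[float]]:
    """Gap closure per skill, for skills scored in both assessment rounds."""
    return {
        skill: gap_closure(baseline[skill], reassessment[skill], target)
        for skill, target in targets.items()
        if skill in baseline and skill in reassessment
    }
```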

Evidence of application through project work, code contributions, documented use cases, or process improvements provides complementary insight into how new skills are being deployed in real contexts. Track metrics like number of AI-powered automation implementations, AI tool adoption rates across teams, quality measures for AI-assisted work outputs, or time savings achieved.

SkillPanel’s real-time dashboards provide visibility into skill levels, gap closure, and development pathway progress across the workforce. The platform’s analytics support reporting on how skills initiatives affect hiring efficiency, internal mobility, and time-to-productivity.

Industry-specific AI skills requirements

While core AI competencies apply broadly, different industries face distinctive skill requirements shaped by their specific workflows, regulatory constraints, and value creation opportunities.

Healthcare teams face unique challenges as AI embeds into imaging, documentation, and decision support while most clinicians lack formal training in AI fundamentals. Recent analyses show strong growth in roles blending clinical expertise with data science, such as clinical data scientists and AI implementation leads, with demand substantially outpacing supply. Healthcare organizations need assessment focused on interpreting algorithm outputs in clinical context, recognizing AI system limitations that affect patient safety, and navigating regulatory requirements for AI-enabled medical devices.

Financial services face double-digit growth in demand for skills in machine learning, AI model validation, and model risk management. Assessment must emphasize model interpretability, audit trail documentation, bias detection in automated decisions, and alignment with regulatory expectations around model risk management.

Manufacturing sees rapid growth in postings requiring AI, machine learning, or advanced analytics skills for predictive maintenance, quality monitoring, and smart factory operations. Companies report difficulty reskilling existing technicians and engineers to work effectively with AI-driven systems. Skills assessment should evaluate ability to integrate AI insights with operational decision-making and troubleshoot AI-assisted equipment.

Retail organizations face strong increases in demand for data science, recommendation system, and marketing analytics skills as they expand AI-driven personalization and demand forecasting. Many report shortfalls in staff able to manage end-to-end data pipelines and productionize AI models at scale.

Building your AI capability foundation

SkillPanel’s approach transforms assessment data into action through AI-generated skill profiles built automatically from multiple sources, automated skills gap detection comparing current capabilities against target states, and personalized learning pathways that show each employee which skills are needed for target roles. This comprehensive skills assessment software supports decision-making at scale by providing visual, interactive workforce skills maps that help planners prioritize reskilling, upskilling, or strategic hiring.

AI skills assessment has evolved from optional diagnostic to strategic necessity. Success requires moving beyond resume reviews and self-reported competencies to systematic evaluation of technical proficiency, implementation readiness, ethical judgment, strategic thinking, and human-AI collaboration skills. Combining self-assessment, structured testing, and practical evidence through a comprehensive skill assessment platform provides the objective foundation for closing critical gaps through targeted development programs. Organizations that invest in robust AI skills assessment today build the talent advantage that will separate winners from laggards in an AI-driven future.