Learning and development analytics for HR leaders

Learning and development analytics transforms how organizations understand and optimize their training investments. At its core, it captures, measures, and analyzes data about learner behaviors, training effectiveness, and business outcomes. This systematic approach enables HR leaders to answer critical questions: Which programs drive performance improvements? Where should training budgets flow? How do development initiatives impact retention and productivity?

The stakes have never been higher. The global workplace training market reached $401 billion in 2024, with organizations facing mounting pressure to prove value. Learning and development analytics provides that proof, connecting training activities to measurable business results through data-driven insights rather than assumptions.

The evolution from gut feeling to data-driven L&D

Training decisions once relied heavily on intuition and anecdotal feedback. L&D leaders selected programs based on vendor pitches, borrowed strategies from peers, or simply renewed last year’s initiatives. This approach left organizations vulnerable to wasted resources and missed opportunities.

Data availability changed everything. Learning management systems now capture detailed interaction patterns. HR platforms track career progression and performance metrics. Business intelligence tools connect training data to operational outcomes. This wealth of information enables precise measurement of what works and what doesn’t.

The shift mirrors broader business transformation. Just as marketing moved from creative guesswork to performance analytics, L&D now operates with similar rigor. Training analysts examine completion patterns, assess knowledge retention, and correlate learning activities with performance improvements. This evolution empowers HR leaders to optimize programs continuously, allocate budgets strategically, and speak the language of business impact.

When Analytics Investments Fail

Not all analytics initiatives succeed. Organizations with fewer than 200 employees often see better ROI from simple LMS reporting than from expensive predictive platforms. Complex dashboards that need dedicated analysts to interpret them can languish unused when L&D teams lack statistical backgrounds. Before investing in advanced analytics, assess whether your organization has clean data, stakeholder buy-in, and realistic implementation capacity.

Business impact: How analytics transforms training ROI

Analytics fundamentally reshapes how organizations calculate and improve training returns. Traditional ROI calculations often stopped at cost-per-learner metrics. Modern learning analytics digs deeper, connecting training investments to revenue generation, productivity gains, and talent retention.

The business case proves compelling. Companies with formalized training programs yield 218% higher income per employee compared to those without structured development. More specifically, 42% of companies report that implementing eLearning has increased their revenue by improving workforce productivity and performance.

Analytics makes these improvements measurable and repeatable. Organizations track performance metrics before and after training interventions, isolating the specific impact of development programs. They identify which learning modalities deliver the strongest results for different skill types. They optimize content based on engagement data and knowledge retention assessments.

The retention impact alone justifies investment. 94% of employees state they would stay at a company longer if it invested in their learning and development. Analytics helps target these investments strategically, focusing resources where they deliver maximum retention value while supporting business-critical skill development.

The four types of learning analytics explained

Learning analytics encompasses four distinct analytical approaches, each serving unique purposes in optimizing training outcomes. Understanding these types helps HR leaders build comprehensive measurement strategies that go beyond surface-level reporting to drive continuous improvement.

Descriptive analytics: Understanding what happened

Descriptive analytics forms the foundation of any learning analytics strategy. This approach aggregates historical data to reveal patterns and trends in learner behavior. It answers fundamental questions about what occurred within training programs without explaining causation.

Modern platforms capture granular interaction data including login frequencies, assignment completion rates, quiz scores, and content interaction patterns. The resulting dashboards show which courses attract engagement, when learners typically access materials, and where completion rates excel or falter.

Most organizations begin their analytics journey here. 81% of L&D professionals place their organizations at basic measurement or data evaluation levels, primarily leveraging descriptive analytics. These capabilities provide essential visibility, showing that 4,000 people completed a course or that engagement dropped during specific content modules.

Diagnostic analytics: Discovering why it happened

Diagnostic analytics moves beyond simple observation to investigate root causes. This approach employs data mining, discovery techniques, and drill-down processes to uncover why specific trends emerged or anomalies occurred.

When completion rates drop for a particular course, diagnostic analytics explores potential explanations. Perhaps the content length exceeds learner attention spans. Maybe prerequisite knowledge gaps prevent progression. The course might be scheduled during high-workload periods. Diagnostic methods test these hypotheses against data.
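As a minimal sketch of this kind of drill-down, the snippet below tests one of the hypotheses above (content length) by comparing completion rates for short and long courses. The records, the 60-minute threshold, and the function name are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical enrollment records: (learner_id, course_minutes, completed)
enrollments = [
    ("e01", 20, True), ("e02", 25, True), ("e03", 30, True), ("e04", 28, False),
    ("e05", 90, False), ("e06", 95, True), ("e07", 120, False), ("e08", 110, False),
]

def completion_rate_by_length(records, threshold_minutes=60):
    """Return the completion rate for short vs. long courses.

    The 60-minute cut-off is an assumption; pick one that fits your catalog.
    """
    totals = defaultdict(int)
    completed = defaultdict(int)
    for _learner, minutes, done in records:
        bucket = "short" if minutes <= threshold_minutes else "long"
        totals[bucket] += 1
        completed[bucket] += int(done)
    return {bucket: completed[bucket] / totals[bucket] for bucket in totals}

rates = completion_rate_by_length(enrollments)
print(rates)
```

A gap between the two buckets supports the content-length hypothesis but does not prove it. The same grouping can be repeated for other candidate factors, such as scheduling period or prerequisite status, before drawing conclusions.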

This investigative approach identifies causal relationships in learner behavior. Organizations discover that mobile-accessible content drives different engagement patterns than desktop-only materials. 70% of learners feel more motivated when training on a mobile device compared to a computer, an insight diagnostic analytics helps contextualize within specific organizational patterns.

Common Diagnostic Pitfall: Organizations often mistake correlation for causation. Just because high performers complete more training doesn’t mean training created their performance. Strong diagnostic analytics requires controlled comparisons and careful interpretation, not just identifying patterns.

Predictive learning analytics: Forecasting future outcomes

Predictive learning analytics leverages statistical models, machine learning algorithms, and historical data to forecast future behaviors and trends. This forward-looking approach enables proactive intervention rather than reactive problem-solving.

Organizations use decision trees, neural networks, and regression techniques to identify learners likely to struggle before they encounter difficulties. These predictive models analyze patterns including initial assessment scores, engagement levels, and learning pace to flag at-risk individuals. Early warning systems then trigger personalized support interventions.

The forecasting extends beyond individual learners to organizational skill needs. Predictive models analyze industry trends, technology adoption patterns, and workforce demographics to anticipate future skill gaps. This foresight enables strategic training investments that prepare employees for upcoming challenges rather than scrambling to address gaps reactively.

Reality Check on Predictive Analytics: Real-time dashboards require substantial infrastructure. Organizations with legacy systems that can’t integrate data streams often spend 12-18 months cleaning and consolidating data before predictive models deliver value. Weekly reports using diagnostic analytics may provide better ROI for mid-market companies than pursuing predictive capabilities prematurely.

Prescriptive analytics: Recommending actions for improvement

Prescriptive analytics represents the most advanced analytical tier, generating specific recommendations to optimize learning outcomes. This approach doesn’t just predict what might happen but prescribes what actions to take for best results.

The technology suggests specific teaching methodologies, recommends educational resources tailored to individual needs, and proposes adaptive content adjustments. When analytics identify a struggling learner, prescriptive systems recommend the optimal intervention: additional practice exercises, alternative content formats, peer collaboration, or instructor support.

Prescriptive analytics also optimizes content strategy. Systems analyze which materials drive the strongest knowledge retention for different topics and learner segments. They recommend content modifications, suggest optimal delivery timing, and propose personalized learning sequences that maximize efficiency. In one reported result, tailoring learning paths with AI led to a 57% increase in learning efficiency, with corresponding productivity improvements.

Essential learning and development metrics to track

Comprehensive measurement requires tracking metrics across three interconnected dimensions: engagement, performance, and business impact. Each category provides distinct insights that together create a complete picture of training effectiveness and ROI.

Engagement metrics

Engagement metrics reveal how learners interact with training content and whether programs capture sustained attention. These indicators serve as leading measures, often predicting downstream performance outcomes.

Course Completion and Drop Rates

Completion rates indicate whether training resonates sufficiently to retain learner attention through program conclusion. High completion rates suggest compelling content and appropriate difficulty levels. Conversely, elevated drop rates signal potential issues with relevance, complexity, or delivery format.

Tracking completion at granular levels reveals specific pain points. Organizations monitor not just final completion but progression through content modules, identifying where learners disengage. This detailed view enables targeted improvements rather than wholesale program overhauls.

Drop-off analysis proves equally valuable. Understanding when and why learners abandon training illuminates design flaws or misaligned expectations. Perhaps lengthy introductory modules lose attention before reaching valuable content. Maybe assessments feel disconnected from practical application.
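The module-level view described above can be sketched as a simple funnel. The module names and learner counts below are hypothetical; in practice they would come from an LMS progress export.

```python
# Hypothetical counts of learners who reached each module, in course order.
reached = [
    ("Introduction", 200),
    ("Module 1", 180),
    ("Module 2", 90),
    ("Final assessment", 81),
]

def drop_off_by_step(funnel):
    """Return (from_module, to_module, share_lost) for each consecutive pair."""
    steps = []
    for (name_a, count_a), (name_b, count_b) in zip(funnel, funnel[1:]):
        lost = (count_a - count_b) / count_a if count_a else 0.0
        steps.append((name_a, name_b, lost))
    return steps

steps = drop_off_by_step(reached)
worst = max(steps, key=lambda step: step[2])      # transition losing the most learners
completion_rate = reached[-1][1] / reached[0][1]  # finishers / starters

for name_a, name_b, lost in steps:
    print(f"{name_a} -> {name_b}: {lost:.0%} lost")
print(f"Overall completion: {completion_rate:.1%}")
```

In this made-up data, the overall completion rate hides the fact that half of the remaining learners leave between Module 1 and Module 2, which is where a targeted fix would start.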

Time Investment and Learning Pace

Time metrics reveal both efficiency and engagement depth. Organizations track total time invested per learner, average time-to-completion for courses, and pace variations across different content types.

Research shows study time for online learning is 40-60% less compared to traditional methods without sacrificing outcomes. Analytics help organizations realize these efficiency gains while ensuring compressed timelines don’t compromise learning quality.

Learning pace analysis identifies outliers requiring attention. Learners progressing unusually quickly might skip important content or possess prerequisite knowledge suggesting alternative placements. Those progressing slowly may need additional support or prerequisite training.
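One simple way to surface pace outliers is to compare each learner's time-to-completion with the median for the same course. The sketch below assumes hypothetical hours and arbitrary cut-offs (under half or over double the median); both are illustrative choices, not established thresholds.

```python
from statistics import median

# Hypothetical hours each learner took to finish the same course.
hours_to_complete = {"a": 4.0, "b": 5.0, "c": 4.5, "d": 1.5, "e": 12.0, "f": 5.5}

def flag_pace_outliers(hours, fast_ratio=0.5, slow_ratio=2.0):
    """Flag learners far below or far above the median completion time.

    fast_ratio and slow_ratio are assumed cut-offs; tune them per course.
    """
    typical = median(hours.values())
    fast = sorted(k for k, h in hours.items() if h < typical * fast_ratio)
    slow = sorted(k for k, h in hours.items() if h > typical * slow_ratio)
    return typical, fast, slow

typical, fast, slow = flag_pace_outliers(hours_to_complete)
print(f"median={typical}h fast={fast} slow={slow}")
```

Fast learners are candidates for a placement check or a more advanced course, and slow learners for additional support. The flag is a prompt for a conversation, not a verdict.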

The scheduling dimension matters too. 68% of employees say they would rather learn at work, integrated into their workday, than on personal time. Analytics showing when learners access training inform scheduling decisions that boost participation and completion.

Learner Satisfaction and Feedback Scores

Satisfaction metrics capture subjective learner experience through surveys, ratings, and qualitative feedback. While perceptions don’t always correlate perfectly with learning outcomes, satisfaction influences engagement levels and program reputation.

Post-training surveys typically assess content relevance, instructor effectiveness, platform usability, and overall value. Organizations track Net Promoter Scores measuring whether learners would recommend programs to colleagues.
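Net Promoter Score is conventionally computed from a 0-10 "would you recommend" rating as the percentage of promoters (9-10) minus the percentage of detractors (0-6). A minimal sketch with hypothetical survey responses:

```python
def net_promoter_score(ratings):
    """NPS on a 0-10 scale: % promoters (9-10) minus % detractors (0-6)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)

# Hypothetical post-training survey responses.
survey = [10, 9, 9, 8, 7, 7, 6, 5, 10, 3]
nps = net_promoter_score(survey)
print(nps)
```

The score ranges from -100 to 100. Passive ratings (7-8) count toward the denominator but toward neither group, so a program can have many satisfied learners and still score near zero.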

Qualitative feedback provides context that quantitative scores lack. Open-ended responses reveal specific strengths and weaknesses, suggest content improvements, and identify technical issues affecting experience.

Performance metrics

Performance metrics connect training directly to capability improvements and behavioral change. These measures prove whether learning translates into enhanced job performance and skill development.

Knowledge Retention and Assessment Results

Assessment scores provide objective measurement of knowledge acquisition. Pre-training assessments establish baselines, post-training evaluations measure immediate gains, and delayed assessments test retention over time.
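The three measurement points can be combined into an immediate gain, a retained gain, and the share of the gain that survives to the delayed assessment. The scores and field names below are hypothetical, and the calculation is one simple way to summarize them, not a standard formula.

```python
from statistics import mean

# Hypothetical scores (0-100) per learner: (pre, post, delayed follow-up)
scores = {"a": (40, 85, 75), "b": (50, 90, 80), "c": (60, 95, 85)}

def summarize_retention(records):
    """Compare average pre-training, post-training, and delayed scores."""
    pre = mean(s[0] for s in records.values())
    post = mean(s[1] for s in records.values())
    delayed = mean(s[2] for s in records.values())
    immediate_gain = post - pre
    retained_gain = delayed - pre
    # Share of the immediate gain still present at the delayed assessment.
    retained_share = retained_gain / immediate_gain if immediate_gain else None
    return {
        "immediate_gain": immediate_gain,
        "retained_gain": retained_gain,
        "retained_share": retained_share,
    }

summary = summarize_retention(scores)
print(summary)
```

A retained share well below 1 suggests that learners memorized material for the test but did not hold on to it, which is the distinction the delayed assessment is meant to reveal.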

Ongoing skills assessments track employee progress through learning paths and knowledge levels, measuring true impact on job performance rather than just immediate recall. This longitudinal approach distinguishes temporary memorization from durable skill development.

Assessment design significantly impacts measurement validity. Organizations increasingly adopt scenario-based evaluations and practical demonstrations over multiple-choice tests. These performance-based assessments better predict real-world capability and reveal whether learners can apply knowledge in context.

Skills Application and Behavioral Change

True learning impact manifests through changed workplace behaviors. Organizations track whether trained employees actually apply new skills in their roles and whether these applications drive better outcomes.

Observation protocols, manager evaluations, and performance reviews capture behavioral change. Organizations measure frequency of skill application, quality of execution, and consistency over time.

Skills application data often reveals gaps between knowledge acquisition and practical use. Learners might demonstrate understanding in assessments yet fail to apply skills on the job. This gap points to barriers like insufficient practice opportunities, lack of managerial support, or organizational processes that discourage new approaches.

Performance Improvement Post-Training

Performance metrics before and after training enable precise ROI calculation on development programs. Organizations compare productivity measures, quality indicators, and efficiency metrics for trained versus untrained employees or pre-training versus post-training periods.
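When both comparisons are available (trained versus untrained, and before versus after), they can be combined in a difference-in-differences estimate: the change in the trained group minus the change in the untrained group. The sketch below uses hypothetical productivity figures and assumes both groups would have followed the same trend without training, which should be checked rather than taken for granted.

```python
from statistics import mean

# Hypothetical weekly units handled per employee, before and after the training window.
trained = {"before": [50, 52, 48, 50], "after": [58, 60, 56, 58]}
untrained = {"before": [49, 51, 50, 50], "after": [52, 54, 53, 53]}

def diff_in_diff(treated, control):
    """Change in the treated group minus change in the control group."""
    treated_change = mean(treated["after"]) - mean(treated["before"])
    control_change = mean(control["after"]) - mean(control["before"])
    return treated_change - control_change

effect = diff_in_diff(trained, untrained)
print(effect)
```

Here the trained group improved by 8 units and the untrained group by 3, so only about 5 units are attributed to training. A naive before/after comparison would have credited training with the full 8.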

The performance connection proves financially significant. Online learning can boost employee retention by as much as 80% while improving employee performance by 15-25%. These dual benefits compound over time, creating substantial organizational value.

Tracking also reveals differential impact across learner segments. Perhaps training delivers stronger results for certain roles, experience levels, or baseline skill ranges. These insights enable targeting that maximizes training efficiency by focusing resources where they generate highest returns.

Business impact metrics

Business impact metrics connect learning investments to organizational outcomes that matter to executives. These top-line measures demonstrate how training contributes to strategic priorities and financial performance.

Training ROI and Cost Per Learner

ROI calculations compare training costs against measurable benefits. Comprehensive approaches factor development expenses, delivery costs, learner time investment, and administrative overhead against performance improvements, retention gains, and productivity increases.
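The comparison above reduces to the standard ROI formula: net benefit divided by total cost. The sketch below applies it to hypothetical cost and benefit figures grouped under the categories named in the paragraph; every number is invented for illustration.

```python
def training_roi(costs, benefits, learners):
    """Return (ROI in percent, cost per learner) for a training program."""
    total_cost = sum(costs.values())
    total_benefit = sum(benefits.values())
    if total_cost <= 0 or learners <= 0:
        raise ValueError("total cost and learner count must be positive")
    roi_percent = 100 * (total_benefit - total_cost) / total_cost
    return roi_percent, total_cost / learners

# Hypothetical annual figures for one program.
costs = {
    "development": 40_000,
    "delivery": 25_000,
    "learner_time": 30_000,
    "administration": 5_000,
}
benefits = {
    "performance_improvement": 90_000,
    "retention_gains": 40_000,
    "productivity_increase": 20_000,
}

roi_percent, cost_per_learner = training_roi(costs, benefits, learners=200)
print(f"ROI: {roi_percent:.0f}%  cost per learner: ${cost_per_learner:,.0f}")
```

The hard part in practice is the benefits side: each figure needs a defensible method for converting an observed improvement into money, and the result is only as credible as those conversions.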

The cost perspective matters too. The average cost per learning hour used in 2024 was $165, representing a 34% increase from 2023’s average. Understanding these costs per learner helps optimize delivery methods and identify efficiency opportunities.

ROI varies significantly by training type and delivery method. Research shows online training returns $30 on every $1 invested thanks to quicker skill application and reduced training time. Analytics help organizations shift investments toward highest-ROI modalities while maintaining effectiveness.

Employee Retention and Promotion Rates

Development opportunities significantly reduce employee turnover. Analytics tracking retention rates for trained versus untrained employees quantify this impact. Organizations also measure whether specific programs correlate with stronger retention in high-turnover roles or departments.

Promotion rates provide another retention-linked metric. Organizations typically see two to three times more promotions among participants of workforce education programs. This internal mobility both retains talent and fills leadership pipelines more efficiently than external hiring.

The retention economics prove compelling. Replacing employees costs 50-200% of annual salary depending on role complexity. Even modest retention improvements driven by effective L&D quickly justify program investments through avoided turnover costs alone.
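The avoided-cost argument can be worked through with a short calculation. The departure counts and average salary below are hypothetical; the 0.5 and 2.0 replacement ratios correspond to the 50-200% range cited above.

```python
def avoided_turnover_cost(departures_before, departures_after, avg_salary, replacement_ratio):
    """Estimate savings from fewer departures.

    replacement_ratio is the replacement cost as a fraction of annual salary.
    """
    avoided = departures_before - departures_after
    return avoided * avg_salary * replacement_ratio

# Hypothetical: departures fall from 100 to 90 per year at a $60,000 average salary.
low = avoided_turnover_cost(100, 90, 60_000, 0.5)
high = avoided_turnover_cost(100, 90, 60_000, 2.0)
print(f"Estimated savings: ${low:,.0f} to ${high:,.0f}")
```

The estimate assumes the reduction in departures is actually attributable to L&D, which needs separate evidence, such as a comparison against similar untrained groups.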

Productivity and Revenue Impact

Productivity metrics connect training to operational efficiency and output quality. Organizations track production rates, error reductions, customer satisfaction improvements, and cycle time decreases following relevant training interventions.

Revenue attribution presents greater complexity but offers powerful impact demonstration. Sales training might correlate with revenue per representative. Customer service training could link to retention rates and customer lifetime value. Technical training may enable new service offerings or product capabilities.

Data sources: What to measure in your L&D programs

Comprehensive learning analytics requires integrating data from multiple sources to create complete visibility into training effectiveness and business impact. Organizations must identify relevant data streams and establish collection mechanisms that capture meaningful information without overwhelming learners or administrators.

Learning platform data and LMS analytics

Learning management systems serve as the foundational data source, tracking learner engagement, course completion rates, assessment scores, and time spent on training modules. This data provides essential visibility into how employees interact with learning content and where programs succeed or struggle.

Modern LMS platforms capture granular interaction data including video viewing patterns, content navigation paths, discussion participation, and resource downloads. This detailed behavioral data reveals engagement quality beyond simple completion metrics, showing whether learners actively process content or rush through to claim credit.

Assessment data from LMS platforms measures knowledge acquisition through quizzes, tests, practical exercises, and project submissions. Pre- and post-training assessments enable measurement of specific skill gains attributable to training interventions rather than general experience or external learning.

Business performance data

Linking L&D initiatives to business performance data helps organizations assess training impact on key outcomes. Seamless integrations with CRM systems and internal communication platforms enable tracking of cross-functional learning adoption and its influence on operational results.

Sales data, customer satisfaction scores, production metrics, quality indicators, and efficiency measures all provide context for training effectiveness. Organizations analyze performance trends before and after training deployments, comparing trained versus untrained employees to isolate training impact.

Project outcomes and deliverable quality offer additional performance indicators. Organizations track whether trained employees complete projects faster, produce higher-quality work, or contribute more innovative solutions.

HR and talent management data

HR information systems connect to L&D platforms to enable automated enrollments and to supply employee demographics, job roles, tenure, and performance classifications. This integration provides essential context for analyzing training effectiveness across different employee segments and organizational contexts.

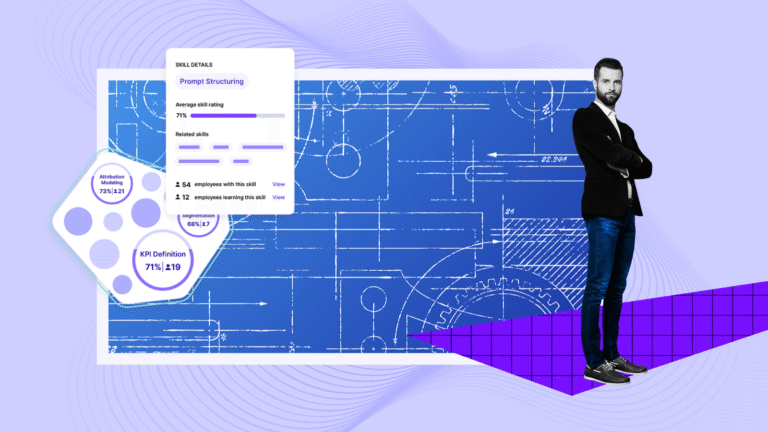

Performance management system data reveals whether training correlates with improved performance ratings, goal achievement, and competency assessments. Skills dashboards map current capabilities against organizational needs, identifying gaps that training should address.

Career progression data including promotions, lateral moves, and succession planning outcomes demonstrate whether training enables advancement. Organizations track time-to-promotion for trained employees compared to peers, measuring whether development accelerates career growth.

External benchmarking data

Comparing internal L&D metrics with industry benchmarks provides valuable context for performance assessment. External data helps organizations identify whether their results represent excellence requiring sustainment or underperformance demanding improvement.

Industry associations and research organizations publish benchmarks for completion rates, learner satisfaction, training costs per employee, and development hours per capita. These comparisons reveal how organizational L&D capabilities stack up against peers and competitors.

Best practice research from leading organizations provides models for emulation. Case studies detailing training innovations and their impacts help organizations identify opportunities to enhance their own programs by adapting proven approaches from similar contexts.

How to implement learning analytics in your organization

Successful implementation requires systematic planning that aligns analytics capabilities with organizational needs and readiness. Organizations should approach implementation incrementally, building capabilities progressively rather than attempting comprehensive deployment immediately.

Step 1: Define your L&D analytics strategy and goals

Begin by identifying specific business questions analytics should answer. Rather than collecting data indiscriminately, focus on addressing real challenges like understanding why turnover increases in technical teams or evaluating learning and development investment effectiveness.

Establish clear analytical objectives tied to business priorities. Perhaps leadership needs visibility into skill gaps blocking strategic initiatives. Maybe compliance requirements demand training completion tracking. Different stakeholders need different insights, so comprehensive strategy considers multiple perspectives.

Assess your organization’s analytics maturity realistically. Organizations just beginning their analytics journey should start with basic reporting and compliance tracking before attempting predictive modeling. Understanding current capabilities helps set appropriate initial goals.

Critical Reality Check: Define specific problems analytics will solve before implementation begins. Without clear goals—whether skill gap analysis, improving L&D ROI, or introducing personalized learning—even advanced analytics programs fail to deliver meaningful impact. Start with the questions, then build tools to answer them.

Step 2: Establish data collection and integration processes

Effective analytics requires systematic data collection and integration across multiple systems. Organizations must conduct comprehensive inventories of existing HR data sources including HRIS platforms, applicant tracking systems, performance management tools, learning management systems, engagement surveys, and compensation systems.

Prioritize interoperability and system integration from the start. Analytics solutions must integrate seamlessly with learning management systems, HRIS platforms, performance management tools, and other relevant technologies. This integration eliminates manual data transfers while ensuring consistency and timeliness.

Establish a clear data taxonomy aligned with organizational goals. Define specific learning data elements, including engagement metrics, course-level outcomes, and demographic factors, rather than collecting data haphazardly. This structured approach ensures collected data actually addresses priority questions.
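As a rough illustration of a defined data element plus a cross-system join, the sketch below declares a typed learning record and links it to HR data by employee ID. The field names, IDs, and departments are hypothetical and do not reflect any particular LMS or HRIS export format.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class LearningRecord:
    """One agreed-upon data element: a learner's outcome in a course."""
    employee_id: str
    course_id: str
    completed: bool
    score: Optional[float]  # None when no assessment was taken

# Hypothetical extracts from an HR system and an LMS.
hr_departments = {"e01": "Sales", "e02": "Sales", "e03": "Support"}
lms_records = [
    LearningRecord("e01", "onboarding", True, 88.0),
    LearningRecord("e02", "onboarding", False, None),
    LearningRecord("e03", "onboarding", True, 92.0),
    LearningRecord("e99", "onboarding", True, 75.0),  # no matching HR entry
]

def completion_by_department(records, departments):
    """Join LMS records to HR data and report unmatched employee IDs."""
    totals, done, unmatched = {}, {}, []
    for rec in records:
        dept = departments.get(rec.employee_id)
        if dept is None:
            unmatched.append(rec.employee_id)
            continue
        totals[dept] = totals.get(dept, 0) + 1
        done[dept] = done.get(dept, 0) + int(rec.completed)
    rates = {dept: done[dept] / totals[dept] for dept in totals}
    return rates, unmatched

rates, unmatched = completion_by_department(lms_records, hr_departments)
print(rates, unmatched)
```

Reporting unmatched IDs instead of silently dropping them gives an early signal of the data quality problems that tend to appear when systems are first connected.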

Data Quality Pitfall: Poor data quality undermines analytical validity regardless of technological sophistication. Organizations often discover legacy systems can’t export clean data, requiring extensive manual cleanup. Budget 30-40% of implementation time for data quality remediation before expecting useful insights.

Step 3: Build analytics dashboards and reporting systems

Create role-based dashboard views tailored to different stakeholder groups. Executives need high-level ROI and impact metrics presented concisely. Managers require granular visibility into team performance and skill gaps. Learners benefit from personalized progress tracking and development recommendations.

Prioritize clear visual communication through appropriate visualization types. KPI cards and trend lines work well for executive dashboards. Progress bars and heat maps effectively communicate learner insights. Bar charts with scatter plots help analyze content performance patterns.

Start small by focusing on 2-3 core KPI categories rather than attempting comprehensive measurement immediately. Begin with essential metrics like completion rates, learner satisfaction, and basic performance outcomes. Gradually expand analytical capabilities as organizational maturity and stakeholder comfort increase.

Foster an analytics-driven culture by training L&D teams on dashboard interpretation and data-informed decision practices. Technical tools deliver value only when users possess skills to extract insights and translate findings into program improvements.

Step 4: Train your team and create an analytics culture

Upskill HR teams for data literacy by developing capabilities in statistics, data visualization, analytical thinking, and translating data insights into business recommendations. This capability development proves essential for effectively using workforce analytics tools and interpreting insights meaningfully.

Provide hands-on training with selected analytics platforms and dashboards. Theoretical understanding means little without practical experience navigating systems, running reports, and interpreting results. Structured training programs should include real scenario practice and guided exploration.

Establish feedback loops for ongoing monitoring and refinement of analytics initiatives. Measure the impact of decisions made using analytical insights, creating accountability for data-driven approaches while identifying areas where analytics add most value.

Deploy targeted pilot projects to identify specific skill development needs rather than attempting organization-wide implementation immediately. Focus on defined goals within one department or a few courses initially. This approach manages risk while building organizational confidence through early successes.

Real-world implementation: Navigating challenges and achieving results

While statistics demonstrate analytics potential, organizations face predictable obstacles during implementation. Understanding common challenges and realistic timelines helps set appropriate expectations.

Manufacturing company scenario: When data integration fails

A 3,000-person manufacturing company struggling with 40% turnover in technical roles invested in predictive analytics to identify at-risk employees. Initial implementation failed because legacy production systems couldn’t integrate with the new HR analytics platform.

The team spent 8 months building API connections and cleaning inconsistent data across five disconnected systems. Only after this infrastructure work could they begin actual analysis. The lesson: assess technical readiness before purchasing advanced analytics. Organizations with fragmented legacy systems should either budget for extensive integration work or start with simpler analytics within existing platforms.

Eventually, after 18 months total, the manufacturer achieved results: turnover in technical roles dropped to 28% while training costs decreased 15% through better-targeted development programs.

Mid-market retail scenario: Starting too advanced

A 1,200-person retail organization jumped directly to prescriptive analytics with AI-powered learning recommendations. The L&D team lacked statistical backgrounds to validate model outputs or explain recommendations to skeptical managers.

After six months and $150K invested, the analytics platform sat largely unused. Managers didn’t trust “black box” recommendations they couldn’t understand, reverting to intuition-based decisions.

The company reset by implementing basic LMS-integrated reporting first. They spent three months building comfort with descriptive analytics—completion rates, time-to-finish, satisfaction scores. Only after establishing data literacy and trust did they gradually introduce diagnostic capabilities.

The revised approach took longer but created sustainable change. After 12 months, managers routinely consulted dashboards before program decisions, and completion rates improved 22% through targeted interventions based on diagnostic insights.

Key lessons from failed implementations

Organizations consistently encounter these pitfalls:

- Insufficient executive sponsorship: Analytics initiatives require ongoing investment and organizational change. Without executive champions defending budgets and driving adoption, programs stall when competing priorities emerge.

- Misalignment with business goals: Starting with available data rather than business questions generates interesting but useless reports. Define specific decisions analytics should inform before building measurement systems.

- Underestimating change management: New dashboards threaten managers comfortable with intuition-based decisions. Plan for resistance and invest in change management, not just technical implementation.

- Overcomplicating initial deployment: Most organizations spend 12-18 months at the second (diagnostic) level of analytics before predictive capabilities deliver ROI. Trying to skip maturity levels leads to expensive failures.

Advanced applications: Predictive analytics and AI in L&D

Artificial intelligence and predictive analytics represent the frontier of learning and development capabilities. These technologies enable personalization at scale, proactive intervention, and optimization impossible through manual approaches.

Identifying at-risk learners before they fail

Predictive models analyze patterns in engagement data, assessment performance, and learning pace to identify individuals likely to struggle before failure occurs. Early warning systems flag these at-risk learners for proactive support interventions.

Machine learning algorithms detect subtle patterns human observers miss. Perhaps sporadic login patterns, declining assessment scores, or increasing time between activities signal impending disengagement. Predictive systems recognize these warning signs and trigger responses.
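The warning signs listed above can be made concrete with a hand-written, rule-based check. This is a deliberately simple stand-in for a trained model, not a machine learning algorithm: the signal names, the 14-day threshold, and the two-signal cut-off are all assumptions made for illustration.

```python
def warning_signals(login_gaps_days, assessment_scores, max_gap_days=14):
    """Return the names of rule-based warning signs present for one learner."""
    signals = []
    # A single long absence between logins.
    if login_gaps_days and max(login_gaps_days) > max_gap_days:
        signals.append("long_gap")
    # Each gap between activities is longer than the one before.
    if len(login_gaps_days) >= 2 and all(
        later > earlier for earlier, later in zip(login_gaps_days, login_gaps_days[1:])
    ):
        signals.append("widening_gaps")
    # Each assessment score is lower than the one before.
    if len(assessment_scores) >= 2 and all(
        later < earlier for earlier, later in zip(assessment_scores, assessment_scores[1:])
    ):
        signals.append("declining_scores")
    return signals

# Hypothetical learners: days between consecutive logins, and scores in order taken.
learners = {
    "steady": {"login_gaps_days": [2, 3, 2, 3], "assessment_scores": [80, 82, 85]},
    "mixed": {"login_gaps_days": [3, 4, 6, 8], "assessment_scores": [70, 75, 72]},
    "fading": {"login_gaps_days": [2, 5, 9, 16], "assessment_scores": [85, 78, 70]},
}

at_risk = sorted(
    name for name, data in learners.items() if len(warning_signals(**data)) >= 2
)
print(at_risk)
```

A rule set like this is easy to explain to managers and can serve as a baseline. A predictive model is worth the extra effort only if it flags struggling learners noticeably earlier or more accurately than these rules do.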

Research demonstrates AI-enabled personalization systems can predict student performance with 87.3% accuracy when provided sufficient historical data. This predictive precision enables confident intervention decisions that allocate support resources efficiently.

Trade-off Reality: Predictive systems require 12+ months of clean historical data before achieving useful accuracy. Organizations lacking this data foundation see better results from simple diagnostic analytics identifying current strugglers than investing in predictive capabilities that won’t work yet.

Personalizing learning paths with predictive models

AI-driven algorithms create customized learning paths for employees based on individual strengths, weaknesses, learning preferences, and career goals. This tailored approach enhances engagement while improving training outcomes through relevant, appropriately challenging content.

Coursera’s AI capabilities earned maximum scores in The Forrester Wave for Technology Skills Development Platforms, which recognized advanced personalization that gives learners content recommendations aligned with individual needs and organizational goals.

IBM’s Watson Career Coach evaluates employees’ skills and aspirations to provide personalized career recommendations, achieving a 20% reduction in turnover rates while giving employees clearer growth paths. This AI-driven guidance helps employees see development opportunities aligned with their interests.

The results can be significant. Tailoring learning paths with AI has been reported to deliver a 57% increase in learning efficiency, with corresponding productivity improvements as learners focus on the most relevant content rather than generic curricula.

Forecasting future skill gaps and training needs

Predictive analytics helps organizations anticipate future capability requirements before gaps create operational challenges. By analyzing industry trends, technology adoption patterns, and workforce demographics, systems forecast which skills will become critical and when.

Unilever uses predictive analytics to align workforce skills with future roles, resulting in a 15% increase in employee satisfaction while creating more agile talent management. This proactive approach ensures capability availability when business needs evolve.

The forecasting extends to individual career planning. AI systems analyze employee skills, interests, and organizational needs to predict career trajectories and recommend development that prepares employees for future opportunities. This dual benefit supports both organizational capability planning and individual career growth.

AI-powered recommendations for content optimization

Machine learning analyzes learner interaction data and performance outcomes to identify which content drives the strongest knowledge retention for different topics and audience segments. These insights enable continuous content improvement based on effectiveness data rather than subjective assessments.

AI systems recommend content modifications including format adjustments, length optimization, delivery method changes, or difficulty calibration. For example, video may outperform text for certain concepts, or microlearning modules may drive better retention than lengthy courses for specific skills.
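Before any machine learning is involved, the underlying comparison can be approximated with simple grouping. The sketch below is illustrative only: the record fields (topic, format, retention_score) and the minimum sample size are assumptions, and a real analysis would also need to control for audience differences.

```python
from collections import defaultdict
from statistics import mean


def retention_by_format(records):
    """Average follow-up assessment score per (topic, format) pair."""
    grouped = defaultdict(list)
    for r in records:
        grouped[(r["topic"], r["format"])].append(r["retention_score"])
    return {key: mean(scores) for key, scores in grouped.items()}


def best_format_per_topic(records, min_samples=2):
    """Pick the highest-scoring format per topic, skipping thin samples."""
    counts = defaultdict(int)
    for r in records:
        counts[(r["topic"], r["format"])] += 1
    best = {}
    for (topic, fmt), avg in retention_by_format(records).items():
        if counts[(topic, fmt)] < min_samples:
            continue  # too few learners to trust this average
        if topic not in best or avg > best[topic][1]:
            best[topic] = (fmt, avg)
    return best


records = [
    {"topic": "safety", "format": "video", "retention_score": 80},
    {"topic": "safety", "format": "video", "retention_score": 90},
    {"topic": "safety", "format": "text", "retention_score": 70},
    {"topic": "safety", "format": "text", "retention_score": 74},
    {"topic": "safety", "format": "microlearning", "retention_score": 95},
]
print(best_format_per_topic(records))
```

Here the single microlearning result is ignored despite its high score, because one learner is not enough evidence to change a content strategy.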

The optimization impact can be substantial. Organizations employing AI-powered learning tools report 60% improvements in knowledge retention. Content optimization ensures training investments deliver maximum capability development per dollar and hour invested.

Creating effective learning reports for stakeholders

Report effectiveness depends on tailoring content and format to stakeholder needs and priorities. Executives require different information than managers, who need different insights than learners. Successful reporting recognizes these variations and delivers relevant information in appropriate formats.

Executive dashboards: Communicating ROI to leadership

Leadership dashboards prioritize high-level metrics demonstrating training’s business impact. Focus on ROI calculations for training, performance improvement percentages, changes in retention rates, and productivity gains. Executives need a quick understanding of whether L&D investments deliver value, without wading through detailed operational metrics.
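The ROI figure on such a dashboard usually follows the standard formula: net benefit divided by cost, expressed as a percentage. The sketch below shows that arithmetic. The function name and the example figures are hypothetical, and estimating the monetary benefit attributable to training is the hard part in practice; here it is simply taken as an input.

```python
def training_roi(monetary_benefit, program_cost):
    """Return training ROI as a percentage: (benefit - cost) / cost * 100."""
    if program_cost <= 0:
        raise ValueError("program_cost must be positive")
    return (monetary_benefit - program_cost) / program_cost * 100


# Hypothetical program: $100,000 spent, $150,000 in attributed benefit.
print(training_roi(150_000, 100_000))  # prints 50.0
```

An ROI of 50% means each dollar invested returned the dollar plus fifty cents. A negative value means the program cost more than the benefit attributed to it.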

Visual clarity matters enormously at executive levels. KPI cards showing current performance against targets enable rapid status assessment. Trend lines demonstrate trajectory over time. Comparison charts position organizational performance against benchmarks or historical baselines.

Connect metrics directly to strategic priorities. If leadership focuses on revenue growth, show how training correlates with sales performance. If retention challenges top the agenda, emphasize development’s impact on turnover rates. This strategic alignment demonstrates L&D’s contribution to priority outcomes.

Manager reports: Tracking team development progress

Managers need operational visibility into team member development, skill progression, and training completion. Reports should highlight which employees completed required training, identify skill gaps affecting team performance, and flag individuals requiring support or recognition.

Include comparison data showing team performance against organizational averages or departmental peers. Managers benefit from understanding whether their teams excel or struggle relative to broader patterns. This context informs whether issues require local intervention or reflect systemic challenges.
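As a rough sketch of that comparison, the example below computes each team’s completion rate and its gap to the organization-wide rate. The (team, completed) input shape is an assumption for illustration; a real report would pull these records from the LMS.

```python
from collections import defaultdict


def completion_rates_by_team(assignments):
    """assignments: iterable of (team, completed) pairs, completed being a bool."""
    totals = defaultdict(lambda: [0, 0])  # team -> [completed, assigned]
    for team, completed in assignments:
        totals[team][1] += 1
        if completed:
            totals[team][0] += 1
    return {team: done / assigned for team, (done, assigned) in totals.items()}


def gap_to_org_average(assignments):
    """Each team's completion rate minus the organization-wide rate."""
    assignments = list(assignments)
    if not assignments:
        return {}
    org_rate = sum(1 for _, done in assignments if done) / len(assignments)
    return {team: rate - org_rate
            for team, rate in completion_rates_by_team(assignments).items()}


assignments = [
    ("sales", True), ("sales", True), ("sales", True), ("sales", False),
    ("support", True), ("support", False), ("support", False), ("support", False),
]
print(gap_to_org_average(assignments))  # prints {'sales': 0.25, 'support': -0.25}
```

A positive gap suggests the team is ahead of the broader pattern, while a negative gap points to a local issue worth a conversation with the manager.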

Provide actionable insights rather than just data. If reports show declining completion rates, include potential explanations and suggested interventions. When skills gaps emerge, recommend specific training resources addressing deficiencies.

Learner insights: Individual progress and recommendations

Individual learner reports should emphasize personal progress, achievement recognition, and development recommendations. Show completed courses, skills gained, assessment performance, and advancement toward learning goals. This visibility helps learners track development and stay motivated.

Provide personalized recommendations for next development steps based on career interests, current skill levels, and organizational opportunities. AI-driven suggestions identify relevant courses, projects, or experiences advancing individual goals while supporting organizational needs.

Comparison data should focus on self-referenced progress rather than peer rankings. Showing personal improvement over time proves more motivating than competitive positioning for most learners. However, anonymized cohort comparisons can provide useful context about typical progression rates.

Overcoming common learning analytics challenges

Organizations encounter predictable obstacles implementing learning analytics programs. Understanding these challenges and proven mitigation strategies accelerates successful deployment while avoiding common pitfalls.

Data quality and integration issues

Data quality problems undermine analytical validity regardless of technological sophistication. Missing data, inconsistent formats, duplicate records, and outdated information all compromise insight accuracy. Organizations must establish robust data validation rules and conduct regular audits to maintain analytics accuracy.
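A basic audit of this kind does not require specialist tooling. The sketch below counts three of the problems named above (missing fields, duplicate records, inconsistent date formats) in a list of completion records. The field names and the expected date format are hypothetical assumptions, not a schema from any specific LMS.

```python
from collections import Counter
from datetime import datetime

# Hypothetical schema for a training completion record.
REQUIRED_FIELDS = ("employee_id", "course_id", "completed_on")


def audit_records(records, date_format="%Y-%m-%d"):
    """Count missing fields, duplicate records, and badly formatted dates."""
    issues = Counter()
    seen = set()
    for rec in records:
        if any(not rec.get(field) for field in REQUIRED_FIELDS):
            issues["missing_field"] += 1
            continue  # cannot check an incomplete record any further
        key = tuple(rec[field] for field in REQUIRED_FIELDS)
        if key in seen:
            issues["duplicate"] += 1
        seen.add(key)
        try:
            datetime.strptime(rec["completed_on"], date_format)
        except ValueError:
            issues["bad_date_format"] += 1
    return dict(issues)


records = [
    {"employee_id": "e1", "course_id": "c1", "completed_on": "2024-03-01"},
    {"employee_id": "e1", "course_id": "c1", "completed_on": "2024-03-01"},
    {"employee_id": "e2", "course_id": "c1", "completed_on": "03/01/2024"},
    {"employee_id": "e3", "course_id": "", "completed_on": "2024-03-02"},
]
print(audit_records(records))
```

Running a check like this on a schedule, and tracking the counts over time, gives an early signal when an upstream system starts sending malformed data.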

Integration challenges emerge when systems fail to communicate effectively. Learning platforms, HR systems, and business intelligence tools often use incompatible data structures or lack standard integration protocols. Prioritizing interoperability during platform selection prevents subsequent integration headaches.

Establish a clear data taxonomy aligned with institutional goals. Define the specific learning data elements to track, including engagement metrics, course-level outcomes, and demographic factors. This structured approach ensures collected data actually addresses priority analytical questions rather than accumulating unused information.

Privacy concerns and ethical data use

Learning analytics raises legitimate privacy concerns about data collection scope, usage transparency, and protection against misuse. Employees may worry that performance tracking could negatively impact careers or that organizations might use learning data punitively.

Organizations must implement comprehensive data governance policies. Convene stakeholders including HR, IT, legal, and employee representatives to define institutional goals for learning analytics, set governance policies, and ensure alignment with privacy regulations and organizational values.

Maintain transparency about what data is collected, how the organization uses it, and who can access it. Clear communication about data practices alleviates concerns while demonstrating respect for employee privacy. Emphasize how analytics support individual development rather than surveillance.

Building analytics capability without data scientists

Many organizations lack dedicated data science resources, making sophisticated analytics seem unattainable. However, modern analytics platforms increasingly democratize capabilities through user-friendly interfaces requiring minimal technical expertise.

Upskill existing HR teams rather than hiring expensive specialists. Develop capabilities in statistics, data visualization, analytical thinking, and translating data insights into business recommendations. This approach builds sustainable internal capacity while proving more cost-effective than external hiring.

Select tools matching organizational analytical maturity. Platforms designed for non-technical users enable effective analytics without advanced statistical knowledge. Focus on a defined goal in a targeted pilot project within one department or a few courses rather than attempting organization-wide implementation immediately.

Moving from vanity metrics to meaningful insights

Organizations often track easily measured metrics like login counts or course launches without considering whether these “vanity metrics” predict meaningful outcomes. High activity levels mean little if learners don’t retain knowledge or apply skills.

Prioritize metrics that provide actionable insights rather than superficial data points. Effective analytics should turn assessment data into input for workforce management strategy, moving beyond surface-level engagement metrics to demonstrate concrete connections between training investments and organizational performance improvements.

Connect learning metrics to business outcomes. Track whether training participants demonstrate improved performance, achieve higher productivity, or progress faster in careers. These outcome-focused metrics prove training value more convincingly than activity measures.
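One simple way to start connecting learning data to outcomes is to compare an outcome metric between employees who completed training and those who did not. The sketch below does exactly that. The field names are hypothetical, and the result shows an association only: without a control group or adjustment for factors like tenure and role, it does not prove that training caused the difference.

```python
from statistics import mean


def outcome_lift(employees, metric="performance_score"):
    """Mean outcome for trained employees minus mean outcome for untrained ones."""
    trained = [e[metric] for e in employees if e["completed_training"]]
    untrained = [e[metric] for e in employees if not e["completed_training"]]
    if not trained or not untrained:
        raise ValueError("need at least one trained and one untrained employee")
    return mean(trained) - mean(untrained)


employees = [
    {"completed_training": True, "performance_score": 80},
    {"completed_training": True, "performance_score": 90},
    {"completed_training": False, "performance_score": 70},
    {"completed_training": False, "performance_score": 80},
]
print(outcome_lift(employees))  # prints 10
```

The same function works for other outcome fields, such as a productivity measure or months to promotion, by changing the metric argument.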

Building your L&D analytics maturity roadmap

Organizations progress through predictable maturity stages developing learning analytics capabilities. Understanding this progression helps set realistic expectations while providing a roadmap for continuous improvement.

Level 1: Basic reporting and compliance tracking

Initial maturity focuses on fundamental data collection and reporting primarily serving compliance needs. Organizations track which employees completed mandatory training, measure basic participation rates, and document training hours for regulatory requirements.

Analytics at this level answer simple descriptive questions about what happened. Reports show that 4,000 people completed a course or that 85% of employees finished required safety training. 37% of L&D professionals place their organizations at this basic measurement level.

While limited, this foundation proves essential for subsequent maturity development. Establishing consistent data collection processes and basic reporting systems creates infrastructure supporting more sophisticated analysis. Organizations shouldn’t skip this stage attempting premature advanced analytics.

Level 2: Performance analysis and benchmarking

Organizations advance by evaluating data to understand whether outcomes are positive or negative. Analysis reveals that more people completed courses than expected or that satisfaction scores declined from previous periods. 36% of L&D professionals place their organizations at this data evaluation level.

Benchmarking against industry standards provides context for performance assessment. External comparisons reveal whether organizational results represent excellence or underperformance relative to peers.

Internal performance analysis explores relationships between training and business outcomes. Organizations examine whether trained employees demonstrate better performance, whether development programs correlate with retention, or whether specific training modalities deliver superior results.

Maturity Milestone Criteria: Move from Level 1 to Level 2 when you have 12+ months of clean completion data, stakeholders regularly request performance comparisons, and compliance tracking alone no longer justifies analytics investment. Expect to spend 6-9 months at Level 2 building diagnostic capabilities before attempting predictive analytics, and typically 12-18 months at Level 2 before predictive capabilities deliver ROI.

Level 3: Predictive insights and optimization

Advanced maturity leverages predictive analytics to forecast future outcomes and optimize programs proactively. Organizations investigate relationships within data to understand why outcomes occurred and what will likely happen next.

Predictive models identify at-risk learners before they fail, forecast future skill gaps, and anticipate training needs before they become critical. This forward visibility enables strategic interventions and resource allocation rather than reactive problem-solving.

Only 19% of L&D professionals place their organizations at advanced or predictive levels, reflecting the significant capability leap required. Organizations at this maturity possess strong data infrastructure, analytical expertise, and cultural commitment to data-driven decision-making.

Critical Success Factors for Level 3: Most organizations spend 12-18 months at Level 2 before predictive capabilities deliver ROI. Prerequisites include integrated data systems, clean historical data spanning 2+ years, L&D team members with statistical training, and executive sponsorship for ongoing investment.

Level 4: Prescriptive intelligence and continuous improvement

The highest maturity level uses data not only to predict outcomes but also to prescribe actions, based on an understanding of what drives positive results. When a new priority training program launches, prescriptive systems automatically apply optimization strategies drawn from proven patterns.

AI and machine learning enable real-time adaptive systems that continuously refine themselves based on new data. These systems create continuous improvement cycles that enhance effectiveness over time as job requirements evolve.

Organizations at this level embed analytics deeply into L&D operations rather than treating it as a separate reporting function. Data informs every decision from content development to delivery method selection to resource allocation.

Turning analytics into action: Your next steps

Understanding learning and development analytics means little without translating insights into concrete improvements. Organizations must move beyond data collection and reporting to systematic action driving measurable enhancement in training effectiveness and business impact.

Begin by defining clear analytical objectives that address specific business challenges. Identify the questions that need answers: Why does turnover increase in technical teams? How effectively do learning investments impact performance? Which development programs deliver the strongest ROI?

Assess your data infrastructure honestly. Conduct comprehensive inventories of existing HR data sources including HRIS platforms, learning management systems, performance management tools, and engagement surveys. Identify gaps requiring attention and develop strategies to improve data quality through standardized collection and governance frameworks.

Select analytics tools matching your current maturity level. Organizations beginning their journey should start with simpler solutions requiring less technical expertise. For organizations ready to move beyond basic LMS reporting, SkillPanel provides AI-driven skills intelligence with real-time workforce capability dashboards, automated gap analysis, and personalized development recommendations focused specifically on talent strategy and workforce planning.

Deploy targeted pilots demonstrating value before broad implementation. Focus on defined goals within one department or a few courses initially. These manageable projects build organizational confidence through early successes while identifying challenges requiring attention before enterprise deployment.

Establish feedback loops measuring the impact of decisions made using analytical insights. This accountability creates continuous improvement cycles while demonstrating analytics value to skeptical stakeholders. Track whether data-driven decisions actually improve outcomes compared to previous intuition-based approaches.

Build data literacy across L&D teams through training in statistics, data visualization, analytical thinking, and translating insights into business recommendations. Technical tools deliver value only when users have the skills to extract meaning and convert findings into program improvements.

Most importantly, foster a culture valuing evidence over assumptions. Challenge decision-making based solely on intuition or tradition. Demand data supporting proposed initiatives while remaining open to insights contradicting established practices. This cultural shift toward data-driven L&D represents the foundation enabling all other improvements.

Learning and development analytics has evolved from nice-to-have reporting to essential strategic capability. Organizations leveraging data effectively optimize training investments, demonstrate measurable business impact, and develop workforce capabilities more efficiently than competitors relying on intuition. The question isn’t whether to adopt learning analytics but how quickly organizations can build capabilities positioning them for success in an increasingly data-driven business environment.